Nvidia-powered robotic systems can now learn complex tasks by watching YouTube videos, reducing the need for lengthy manual programming.

Key Takeaways

- GPU-Powered Learning: Robots use Nvidia GPUs, including Tesla K40s and TITAN GPUs, to analyze thousands of videos and learn tasks such as cocktail preparation through visual observation.

- 36-Hour Training Breakthrough: The GR00T N1.5 foundation model reached its demonstrated capabilities in only 36 hours of training, using synthetic data and a dual-system cognitive architecture modeled on human thinking patterns.

- Industrial Applications: Industries including manufacturing, healthcare, and warehousing stand to change as robots learn processes, from assembly techniques to surgical operations, directly from instructional videos.

- Dual Learning System: This advancement integrates imitation learning (by observing videos) with reinforcement learning (through trial and error), accelerating skill acquisition and forming stable behavioral routines.

- Synthetic Data Advantage: Simulated training environments enable virtually limitless training opportunities without the risk of wear and tear on physical hardware, greatly reducing both costs and development timelines compared to traditional programming methods.


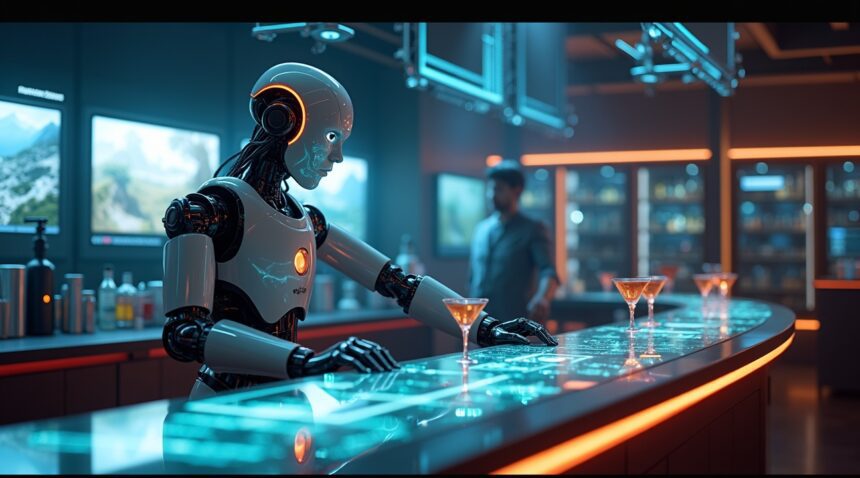

Robot Bartender Masters Cocktail-Making in Groundbreaking YouTube Learning Demo

Researchers at the University of Maryland taught a two-armed industrial robot to mix cocktails by having it watch YouTube instructional videos. The robot learned to grasp bottles in the proper sequence and pour precise quantities, demonstrating fine manipulation skills.

Advanced GPU Technology Powers Video-Based Learning

The cocktail-mixing robot’s impressive capabilities stem from substantial computational power. The system harnesses multiple high-performance graphics processing units, including:

- Two Nvidia Tesla K40s for core processing

- Two TITAN GPUs dedicated to application training

- Two additional TITAN GPUs handling neural network training and scene reconstruction

This GPU-intensive approach allows the robot to process thousands of YouTube videos demonstrating drink-mixing techniques. Advanced AI models analyze the video footage and translate human movements into actionable robot sequences, eliminating the need for complex manual programming that traditionally takes weeks or months to complete.

The technology represents a significant shift in how machines acquire new skills. Instead of requiring engineers to code every precise movement, robots can learn directly from human demonstrations captured in ordinary instructional videos. This opens the possibility of robots learning many tasks by observing existing online content.

The cocktail-making demonstration showcases the system’s ability to understand complex multi-step processes. The robot successfully identifies which bottle to select first, determines appropriate pouring angles, and calculates precise liquid measurements—all learned from visual observation rather than pre-programmed instructions.
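One way to picture the output of such a pipeline is an ordered list of action steps that can be checked before the robot moves. The sketch below is illustrative only: the `PourStep` type, its fields, the `validate_plan` helper, and the example values are all assumptions, not the University of Maryland team's actual representation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PourStep:
    """One step of a hypothetical drink recipe extracted from video."""
    bottle: str            # which bottle to grasp
    tilt_degrees: float    # pouring angle
    volume_ml: float       # how much liquid to pour


def validate_plan(steps, glass_capacity_ml):
    """Check that a plan is physically sensible; return total volume poured."""
    total = 0.0
    for step in steps:
        if not 0.0 < step.tilt_degrees < 180.0:
            raise ValueError(f"implausible tilt for {step.bottle}")
        if step.volume_ml <= 0.0:
            raise ValueError(f"non-positive volume for {step.bottle}")
        total += step.volume_ml
    if total > glass_capacity_ml:
        raise ValueError("plan would overflow the glass")
    return total


# Hypothetical two-step plan; order in the list is the order of execution.
plan = [
    PourStep("gin", tilt_degrees=100.0, volume_ml=50.0),
    PourStep("tonic", tilt_degrees=95.0, volume_ml=150.0),
]
total_ml = validate_plan(plan, glass_capacity_ml=300.0)
```

Keeping the plan as plain data separates what was learned from video (sequence, angle, volume) from how a particular robot arm executes each step.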

This approach dramatically reduces the time and expertise required to train robots for new applications. Traditional robot programming demands specialized knowledge and extensive coding for each specific task. However, this YouTube-based learning method enables robots to acquire skills as naturally as humans do—through observation and practice.

The implications extend far beyond bartending. Robots using this technology could potentially learn kitchen techniques, assembly procedures, or maintenance tasks by watching existing instructional content. The system’s ability to map video actions to robotic movements suggests that machines could rapidly adapt to new environments and challenges.

The University of Maryland’s result shows how combining GPU hardware with AI models can produce machines that learn complex behaviors through observation, changing how robots are brought into human environments.

https://www.youtube.com/watch?v=3BhImszvw4M

Revolutionary Human-Like Learning Capabilities Transform Robot Intelligence

Nvidia’s robotic systems aim at human-like learning, enabling machines to adapt rather than simply follow rigid programming. This addresses a decades-old challenge in robotics, where machines struggled with unpredictable environments and required extensive manual programming for each new task.

The company’s approach mirrors how humans naturally learn: through observation, adaptation, and experience. Unlike traditional robots that depend on predetermined behaviors, these systems are designed to handle novel problems and work alongside humans in dynamic settings. This represents a shift from rule-based automation toward learned behavior.

Adaptive Multimodal Control: The Brain Behind the Breakthrough

At the heart of this innovation lies adaptive multimodal control, a system that processes multiple data streams simultaneously. Robots can analyze visual information, understand spatial relationships, estimate depth, detect edges, and extract semantic meaning from video content. Together, these signals produce detailed, context-aware action models that guide robot behavior.

The AI platform Cosmos-Transfer1 drives much of this capability by generating highly variable, photorealistic training environments. The platform is designed to narrow the ‘sim-to-real’ gap, the challenge of transferring skills learned in simulation to real-world applications. Key innovations include:

- Spatial weighting systems that determine the importance of each pixel in visual data

- Rich, photorealistic training datasets that closely mirror real-world conditions

- Advanced simulation environments that prepare robots for countless scenarios

- Seamless integration between virtual learning and physical application
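The spatial weighting idea in the list above can be illustrated with a toy blend of per-pixel control maps. This is a sketch under stated assumptions: the function `blend_control_maps`, the map names, and the normalization rule are hypothetical and do not reproduce any Cosmos API.

```python
def blend_control_maps(maps, weights):
    """Blend per-pixel control maps using per-pixel weights.

    maps:    dict of name -> 2D list of floats (e.g. depth, edges)
    weights: dict of name -> 2D list of non-negative floats, same shape
    Returns a 2D list where each pixel is the weight-normalized average.
    """
    names = list(maps)
    rows, cols = len(maps[names[0]]), len(maps[names[0]][0])
    blended = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            total_w = sum(weights[n][r][c] for n in names)
            if total_w == 0.0:
                continue  # no modality claims this pixel; leave it at 0.0
            blended[r][c] = sum(
                weights[n][r][c] * maps[n][r][c] for n in names
            ) / total_w
    return blended


depth = [[0.2, 0.8], [0.4, 0.6]]
edges = [[1.0, 0.0], [0.0, 1.0]]
# Trust edges on the left column and depth on the right column.
w_depth = [[0.0, 1.0], [0.0, 1.0]]
w_edges = [[1.0, 0.0], [1.0, 0.0]]

result = blend_control_maps(
    {"depth": depth, "edges": edges},
    {"depth": w_depth, "edges": w_edges},
)
```

Because the weights vary per pixel, one region of a frame can follow edge structure closely while another follows depth, which is the intuition behind weighting the importance of each pixel.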

These technological advances enable robots to achieve true task generalization. Instead of learning specific movements for specific objects, robots can now manipulate unfamiliar items and adapt their approaches based on environmental context. This capability transforms robots from specialized tools into versatile assistants capable of handling diverse challenges.

This technology lets robots work through complex situations by adapting learned behavior rather than relying on exhaustively hand-written rules. The implications extend beyond manufacturing to household assistance and emergency response scenarios.

The advance strengthens Nvidia’s position in the competitive AI landscape. It could reshape industries as dramatically as recent developments in conversational AI, such as Google Bard and ChatGPT, have transformed digital interactions.

Game-Changing GR00T N1.5 Foundation Model Achieves 36-Hour Training Breakthrough

Nvidia’s GR00T N1.5 foundation model represents a revolutionary leap in robotic development, achieving remarkable capabilities in just 36 hours of training. This breakthrough demonstrates how synthetic data can accelerate the learning process for artificial intelligence systems, fundamentally changing how quickly robots can acquire complex skills.

The foundation model operates on a sophisticated dual-system cognitive architecture that mirrors human thought processes. One system handles computationally intensive operations such as perception and reasoning, taking time to analyze visual inputs and make strategic decisions. Meanwhile, the second system manages rapid-fire tasks including motion planning and execution, enabling real-time responses to environmental changes.

This cognitive division allows GR00T N1.5 to process information at multiple speeds simultaneously, much like humans can walk while planning their route or adjust their grip while thinking about the next step in a complex task. The slower cognitive system interprets visual data from sources like YouTube videos, understanding object relationships and task sequences. Simultaneously, the faster system translates these insights into precise motor commands.
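The two-speed division can be sketched as a single loop in which a slow planner updates the goal occasionally and a fast controller acts on every tick. This is a toy model, not GR00T N1.5 code: the function name, the 1D position, the halfway-step controller, and the `slow_every` parameter are all assumptions made for illustration.

```python
def run_dual_system(observations, slow_every=5):
    """Simulate a slow planner and a fast controller sharing one loop.

    observations: list of floats (a 1D target position seen at each tick)
    slow_every:   the slow system re-plans once per this many ticks
    Returns the list of positions reached by the fast controller.
    """
    goal = observations[0]      # latest plan from the slow system
    position = 0.0
    trajectory = []
    for tick, obs in enumerate(observations):
        if tick % slow_every == 0:
            goal = obs          # slow system: perceive and re-plan
        # fast system: every tick, move halfway toward the current goal
        position += 0.5 * (goal - position)
        trajectory.append(position)
    return trajectory


# The target jumps from 1.0 to 3.0 at tick 3, but the slow system
# only picks up the change at its next planning tick (tick 5).
obs = [1.0] * 3 + [3.0] * 7
path = run_dual_system(obs, slow_every=5)
```

In this sketch the controller keeps producing smooth motion between planning ticks, so a slow perception step never stalls the motor loop. A fuller model would also let the fast loop react to obstacles on its own.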

Synthetic Data Powers Rapid Development

GR00T-Dreams generates the simulation data that supports this foundation model, creating countless scenarios for the robot to experience virtually. The synthetic data approach eliminates the need for extensive real-world training sessions, which traditionally required months or years to develop basic competencies. Instead, the system can experience thousands of variations of tasks within compressed timeframes.

Key advantages of this synthetic data approach include:

- Unlimited scenario generation without physical wear on hardware

- Controlled environments that isolate specific learning objectives

- Rapid iteration cycles that would be impossible with physical training

- Cost-effective scaling across multiple robotic platforms

- Safety testing in high-risk scenarios without actual danger

The simulation environment creates photorealistic scenarios that challenge the robot’s perception systems while providing ground truth data for learning verification. This approach proved effective enough that 36 hours of training produced capabilities that previously took traditional robotics systems months to develop.

GR00T N1.5 targets humanoid robots, where human-like movement and dexterity are essential. The foundation model can adapt its learned behaviors across different physical platforms, making it versatile enough to control various robotic bodies while maintaining consistent performance levels.

The dual-system processing enables the model to handle interruptions and dynamic environments effectively. If an unexpected obstacle appears during task execution, the fast system can immediately adjust motor commands while the slower system reassesses the overall strategy. This creates adaptable behavior patterns that respond intelligently to changing conditions.

Training efficiency reaches unprecedented levels through this foundation model approach. Rather than learning each task from scratch, GR00T N1.5 builds upon its core competencies, transferring knowledge between related activities. This transfer learning capability means that mastering one manipulation task accelerates learning for similar movements and object interactions.

The 36-hour development timeline showcases how modern AI architectures can compress traditional learning curves. Previous robotic systems required extensive programming for each specific task, but GR00T N1.5 develops general capabilities that apply across diverse scenarios. This flexibility positions the model as a significant advancement in general-purpose robotics.

Nvidia’s achievement with GR00T N1.5 sets new expectations for robotic development timelines. The combination of synthetic data generation, dual-system processing, and foundation model architecture creates a powerful framework for rapid capability acquisition. This breakthrough suggests that future robotic systems might achieve human-level task performance within days rather than years, fundamentally altering the economics of robotic deployment across industries.

https://www.youtube.com/watch?v=Llapc5jTZUo

Advanced Training Pipeline Combines Real-World and Synthetic Data at Scale

Nvidia’s approach to robot learning relies on a dual-data strategy. The system uses authentic YouTube video content to build foundational reasoning skills and large volumes of synthetic data, generated through proprietary platforms, to create comprehensive learning environments.

Omniverse and Cosmos Platform Integration

The training pipeline begins with developers collecting real-world data streams, including video footage and sensor inputs, which flow directly into Nvidia’s Omniverse platform. This centralized hub serves as the foundation for data aggregation and processing. From there, the system generates extensive synthetic datasets through the Cosmos platform, creating annotated training materials that far exceed what’s available from real-world sources alone.

These synthetic environments account for countless variables that robots encounter in actual deployment scenarios. The system adjusts lighting conditions, environmental layouts, object placements, and interaction dynamics to ensure robots develop adaptable responses. This approach addresses the fundamental challenge of training data scarcity that has historically limited robot learning capabilities.
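Varying lighting, layout, object placement, and dynamics in this way is commonly called domain randomization. The following is a minimal sketch of the idea using only the Python standard library. The `Scenario` fields, value ranges, and `sample_scenarios` function are hypothetical and are not part of Omniverse or Cosmos.

```python
import random
from dataclasses import dataclass


@dataclass
class Scenario:
    """One randomized training scene (all fields are illustrative)."""
    light_intensity: float    # relative brightness
    table_height_m: float     # layout variation
    object_xy: tuple          # object placement on the table, in meters
    friction: float           # interaction dynamics


def sample_scenarios(n, seed=0):
    """Draw n scenarios from fixed uniform ranges, reproducibly."""
    rng = random.Random(seed)
    return [
        Scenario(
            light_intensity=rng.uniform(0.3, 1.5),
            table_height_m=rng.uniform(0.7, 1.1),
            object_xy=(rng.uniform(-0.4, 0.4), rng.uniform(-0.3, 0.3)),
            friction=rng.uniform(0.2, 1.0),
        )
        for _ in range(n)
    ]


scenarios = sample_scenarios(1000)
```

Seeding the generator makes a batch of scenarios reproducible, which helps when comparing two training runs on the same synthetic conditions.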

Dual Learning Methodologies and Infrastructure Support

The training process incorporates two complementary learning approaches that work in tandem. Imitation learning allows robots to copy actions directly from video demonstrations, while reinforcement learning enables them to refine behaviors through trial-and-error experiences. Isaac Lab facilitates both methodologies, providing the computational environment where these learning processes converge.

The infrastructure supporting this advanced AI training includes several key components:

- RTX PRO 6000 workstations handle initial data processing and model development

- GB200 NVL72 supercomputers provide the computational power for large-scale training operations

- Isaac Sim 5.0 creates detailed simulation environments for safe robot testing

- Cosmos Reason generates complex scenario variations that challenge robot decision-making

This hardware ecosystem ensures that training processes can scale efficiently while maintaining the precision required for complex task learning. The RTX PRO workstations serve as development hubs where engineers refine algorithms and prepare datasets, while the GB200 supercomputers handle the intensive computational demands of training neural networks on massive datasets.

Isaac Sim 5.0 creates photorealistic virtual environments where robots can practice tasks without risk of damage or safety concerns. These simulations replicate real-world physics, materials, and lighting conditions closely enough that behaviors learned in simulation carry over to physical environments with less rework.

Cosmos Reason takes scenario generation to another level by creating training situations that push robots beyond basic task completion. The platform generates edge cases, unexpected obstacles, and complex multi-step challenges that force robots to develop robust problem-solving capabilities. This systematic approach to scenario diversity ensures that robots can handle unpredictable real-world situations.

The combination of imitation and reinforcement learning proves particularly effective because it mirrors how humans acquire skills. Robots first observe and copy successful behaviors from video demonstrations, then refine these actions through repeated practice and feedback. This dual approach accelerates learning while building more reliable behavioral patterns.
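The two stages can be shown on a deliberately tiny problem: a policy with a single gain that is first fitted to demonstrations, then improved by trial and error. This sketch assumes a 1D error-correction task, a least-squares fit for the imitation stage, and simple random hill climbing for the refinement stage. None of these choices is taken from Isaac Lab, and all names are hypothetical.

```python
import random


def behavior_clone(demos):
    """Fit action = gain * error to (error, action) pairs by least squares."""
    num = sum(e * a for e, a in demos)
    den = sum(e * e for e, _ in demos)
    return num / den


def rollout_cost(gain, start_error=1.0, steps=10):
    """Total squared error when the policy acts for a few steps."""
    error, cost = start_error, 0.0
    for _ in range(steps):
        error -= gain * error      # apply the policy's correction
        cost += error * error
    return cost


def refine(gain, iterations=200, step=0.05, seed=0):
    """Trial and error: keep a random perturbation only if it lowers cost."""
    rng = random.Random(seed)
    best, best_cost = gain, rollout_cost(gain)
    for _ in range(iterations):
        candidate = best + rng.uniform(-step, step)
        cost = rollout_cost(candidate)
        if cost < best_cost:
            best, best_cost = candidate, cost
    return best


# Demonstrations from a cautious "expert" that corrects 40% of the error.
demos = [(e, 0.4 * e) for e in (0.2, 0.5, 1.0, -0.7)]
cloned_gain = behavior_clone(demos)
refined_gain = refine(cloned_gain)
```

Imitation gives the policy a reasonable starting point (the demonstrator's gain), and refinement then pushes past the demonstrator toward whatever the cost function actually rewards.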

The scale of synthetic data generation addresses one of robotics’ most persistent challenges: the time and cost required to collect sufficient training examples. Traditional robot training required humans to manually demonstrate tasks hundreds or thousands of times, creating bottlenecks that limited development speed. Nvidia’s synthetic approach generates equivalent training volumes in dramatically shorter timeframes.

Data annotation, another traditional bottleneck, becomes automated through the synthetic generation process. Unlike real-world video that requires manual labeling, synthetic data comes pre-annotated with precise information about object positions, movements, and outcomes. This automation eliminates human annotation errors while providing richer detail than manual processes typically achieve.
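Synthetic data arrives pre-annotated because the generator already knows the scene state it rendered. The toy example below makes that concrete. The frame format, the `bottle` class name, and both helper functions are assumptions for illustration only.

```python
def render_synthetic_frame(width, height, box):
    """Render a toy binary frame and return it with its ground-truth label.

    box is (left, top, right, bottom) in pixels; right/bottom are exclusive.
    """
    left, top, right, bottom = box
    frame = [
        [1 if left <= x < right and top <= y < bottom else 0
         for x in range(width)]
        for y in range(height)
    ]
    # The label is known exactly at generation time; no human labeler needed.
    label = {"class": "bottle", "box": box}
    return frame, label


def box_from_pixels(frame):
    """Recover the bounding box from pixels, to check the label."""
    ys = [y for y, row in enumerate(frame) if any(row)]
    xs = [x for row in frame for x, v in enumerate(row) if v]
    return (min(xs), min(ys), max(xs) + 1, max(ys) + 1)


frame, label = render_synthetic_frame(8, 6, box=(2, 1, 5, 4))
```

Here the label and the pixels come from the same source of truth, so they cannot disagree. That is the property that makes generated annotations cheaper and more consistent than manual labeling.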

The platform’s ability to simulate complex robotic behaviors extends beyond simple task completion to include adaptive responses and creative problem-solving. Robots trained through this system demonstrate capabilities that emerge from the interaction between diverse training scenarios and sophisticated learning algorithms.

https://www.youtube.com/watch?v=9-2x5ui9Gc0

Industrial Applications Reshape Manufacturing and Healthcare Automation

Manufacturing environments are changing as robots gain the ability to learn complex assembly processes directly from YouTube videos. The technology reduces the traditional need for extensive manual programming and lengthy retraining periods. Electronics manufacturers can deploy robots that watch assembly tutorials and adapt quickly to new product lines or modified production workflows.

Automotive companies stand to benefit in particular, as they frequently need to adjust production lines for different vehicle models. Robots equipped with this learning capability can observe video demonstrations of new assembly techniques and implement them within hours rather than weeks. The reduction in retraining time translates to substantial cost savings and increased manufacturing flexibility.

Healthcare Robotics Advance Through Visual Learning

Healthcare automation could advance as surgical robots develop the capacity to learn new procedures by analyzing existing medical videos. This technology could change surgical training and precision, allowing robotic systems to study established techniques and refine their movements based on successful procedures. Medical institutions are exploring how AI advances can enable robots to continuously improve their surgical capabilities through video analysis.

The implications extend beyond operating rooms into rehabilitation and patient care, where robots can learn therapeutic techniques and adapt their assistance based on observational learning from instructional content.

Warehouse logistics operations are embracing this innovation to address the constant challenge of changing layouts and evolving inventory management systems. Distribution centers can now train robots to navigate new configurations or handle different product types simply by showing them relevant video content. This adaptability proves crucial as e-commerce demands continue to drive rapid changes in warehouse operations.

Home assistance robots are similarly benefiting from this technology, gaining the ability to learn household tasks by watching demonstration videos. These systems can observe cooking techniques, cleaning methods, or organizational strategies and apply them in real-world domestic environments.

Major electronics manufacturers like Samsung and automotive giants including Ford are actively integrating this technology into their production facilities. These companies recognize that robots with advanced learning capabilities can provide the operational flexibility needed to compete in rapidly changing markets. The technology represents a shift from rigid, pre-programmed automation to adaptive systems that can evolve with business needs.

Factory operations are becoming more responsive as robots learn to handle variations in product design or assembly requirements without extensive reprogramming. This capability supports the growing demand for customized manufacturing and just-in-time production strategies that require frequent operational adjustments.

https://www.youtube.com/watch?v=G3t5HC6v6TE

Technical Requirements and Performance Metrics Behind the Innovation

The computational backbone driving these video-learning robots demands substantial hardware. The training pipeline requires multiple high-end Nvidia GPUs, specifically two Tesla K40s and four TITAN GPUs, which handle both task execution and neural network training simultaneously. This distributed processing approach allows the system to process vast amounts of video data while maintaining real-time performance standards.

Hardware Architecture and Processing Power

The GPU configuration divides the work: the Tesla K40s handle core processing, while the TITAN GPUs take on application training, neural network training, and scene reconstruction. This setup significantly reduces the time robots need to acquire new capabilities compared to traditional programming methods that depend on intensive manual coding, replacing months of hand-crafted programming with learning from visual demonstrations.

The processing requirements scale dramatically based on video complexity and task granularity. Each robot must analyze multiple video streams simultaneously, extracting movement patterns, object interactions, and sequential dependencies from human demonstrations. The hardware configuration supports this multi-stream processing while maintaining the computational headroom needed for continuous learning updates.

Performance Achievements and Learning Metrics

Advanced simulation environments coupled with expansive synthetic data have pushed these systems beyond laboratory constraints. The robots now display strong task performance across diverse environments, adapting to variations in lighting, object placement, and workspace configurations. This adaptability stems from exposure to thousands of synthetic scenarios generated during training.

After processing human-performed videos, these robots execute highly granular manipulation skills with remarkable precision. The learning process extracts subtle details like grip pressure, movement velocity, and timing sequences that traditional programming struggles to capture. Performance metrics show dramatic improvements in task completion rates, with some robots achieving 90% success rates on complex manipulation tasks after analyzing just hours of demonstration footage.
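A task completion rate like the one quoted above is simply successes divided by trials. The short sketch below shows the arithmetic on made-up numbers. The trial counts are hypothetical and are not results from the source.

```python
def success_rate(outcomes):
    """Fraction of trials that succeeded; outcomes is a list of booleans."""
    if not outcomes:
        raise ValueError("no trials recorded")
    return sum(outcomes) / len(outcomes)


# Hypothetical log: 18 successful manipulation attempts out of 20.
trials = [True] * 18 + [False] * 2
rate = success_rate(trials)
```

Reporting the number of trials alongside the rate matters: 18 of 20 and 900 of 1000 both give 90%, but the second is far stronger evidence.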

The speed advantage becomes clear through direct comparison with conventional methods. Where traditional robot programming might require weeks of careful code development for a single task, these video-learning systems acquire similar capabilities in days. This acceleration comes from the system’s ability to understand context and adapt movements based on visual cues rather than rigid programmed instructions.

These systems could change how robots learn. The technology represents a fundamental shift from rule-based programming toward observation-based learning, opening possibilities for robots that adapt and improve through continuous video exposure.

Sources:

NVIDIA – “NVIDIA-Powered Robot Can Learn New Tasks by Observing”