China’s Elf V1 humanoid robot marks a significant breakthrough in emotional robotics: its 30 facial actuators create remarkably lifelike expressions and convincingly human-like emotional responses.

Developed by Shanghai-based AheadForm, this advanced system uses brushless micro-motors and bionic skin technology to achieve unprecedented facial realism that surpasses existing competitors in the humanoid robotics market.

Key Takeaways

- The Elf V1 features 30 facial actuators powered by brushless micro-motors that work in coordination to create fluid, natural expressions that closely mirror human emotions.

- Advanced AI systems enable real-time emotion recognition and generation, allowing the robot to interpret human facial cues and respond with appropriate expressions almost instantaneously.

- AheadForm envisions nearly human-like robots entering common usage within a decade, with fully indistinguishable human-robot interaction capabilities expected within 20 years.

- The robot’s 30 facial actuators far exceed the 17 in Ameca, a leading competitor, establishing a new benchmark for emotional authenticity in humanoid robotics.

- Applications span healthcare, education, and entertainment industries, with particular promise for elder care, customer service, and therapeutic companionship roles.

Technological Innovations

Actuator Coordination

AheadForm has achieved something extraordinary with the Elf V1. The company’s engineers integrated 30 individual facial actuators into a single humanoid platform. Each actuator operates independently while coordinating with others to produce seamless facial movements. This level of sophistication represents a massive leap forward from previous generations of emotional robots.

Brushless Micro-Motors

The brushless micro-motors deliver precise control over every facial muscle movement. These motors respond within milliseconds to AI commands. The result is expressions that appear natural and spontaneous rather than mechanical or programmed. Observers see surprise, joy, concern, and other emotions that feel authentic during interactions.

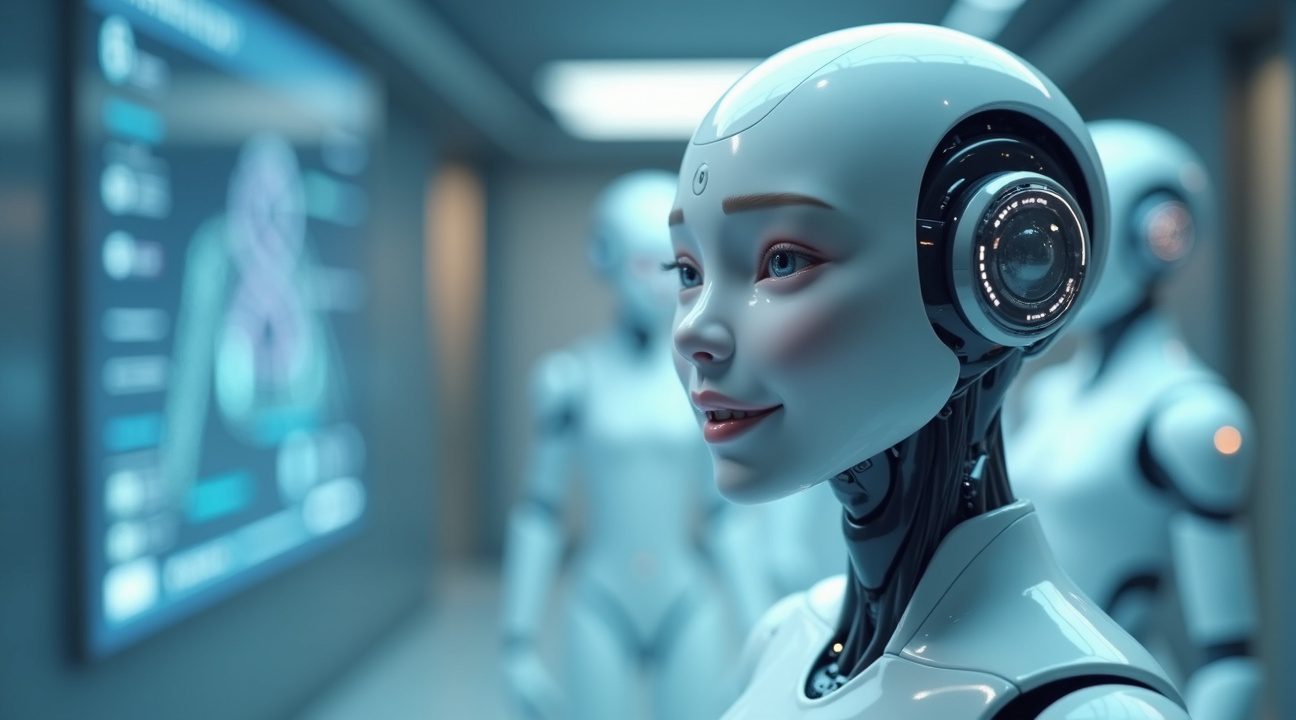

Bionic Skin Technology

The bionic skin technology adds another layer of realism. This synthetic material stretches and contracts like human skin. Fine details such as wrinkles around the eyes during smiles or furrows during concentration appear naturally. The skin responds to the underlying actuator movements with remarkable fidelity.

Artificial Intelligence Capabilities

AheadForm’s AI system processes visual input in real time. The robot analyzes human facial expressions, body language, and vocal tones simultaneously. Machine learning algorithms identify emotional states and select appropriate responses. The aim is conversation that feels natural and emotionally intelligent.

Competitive Edge

The Elf V1 demonstrates clear advantages over existing robots. Ameca, previously considered a leader in expressive robotics, operates with only 17 facial actuators. Other competitors typically feature fewer than 15 actuators. Because each actuator adds an independent point of motion, this numerical advantage allows substantially more nuanced and believable emotional displays.

Early demonstrations show the robot engaging in complex emotional exchanges. The Elf V1 responds to humor with appropriate laughter and timing. During serious conversations, facial expressions shift to match the gravity of topics. Subtle emotional cues that humans take for granted appear naturally during interactions.

Industry Applications

Healthcare

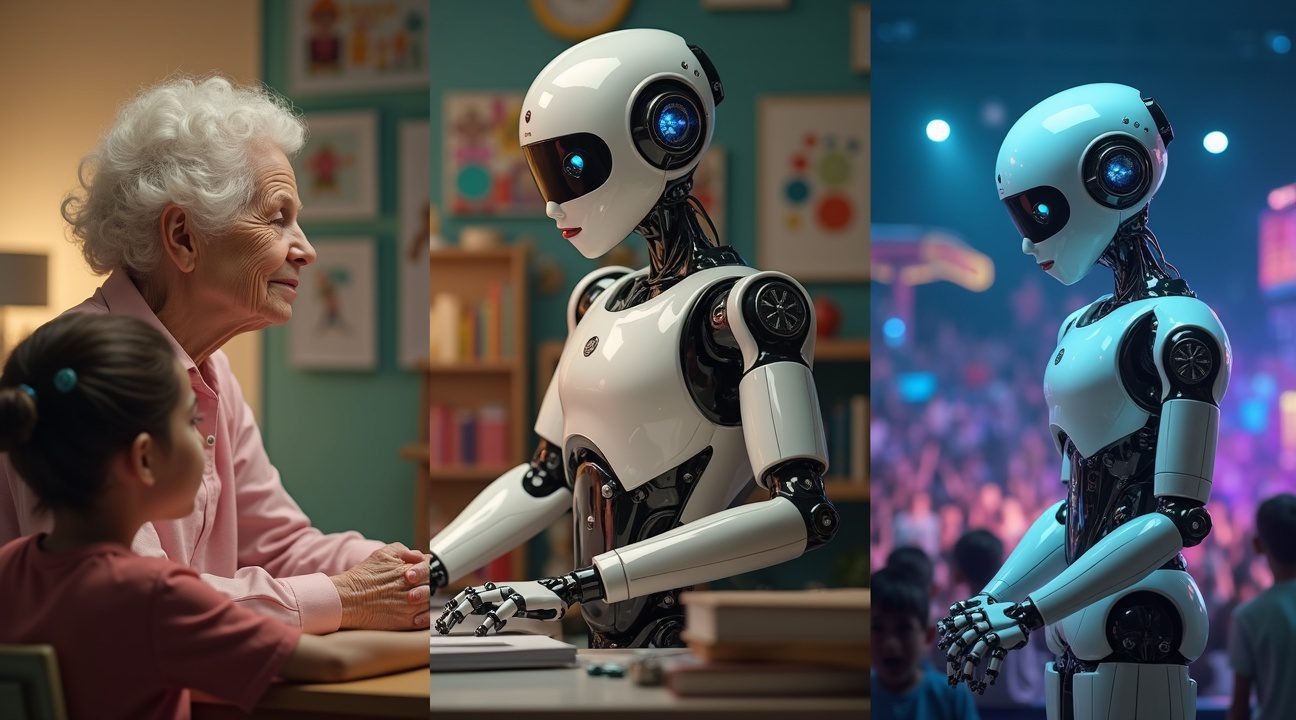

Healthcare applications present immediate opportunities. Elderly patients often experience isolation and depression. The Elf V1 could provide companionship that feels genuine and emotionally supportive. Therapeutic settings might benefit from consistent, patient emotional support that doesn’t experience fatigue or mood variations.

Education

Educational environments could transform through emotionally intelligent robotics. Students respond better to instruction when they perceive emotional connection with teachers. The Elf V1 could adapt its emotional responses to individual learning styles and needs. Encouragement, patience, and enthusiasm could be delivered consistently across all students.

Customer Service

Customer service represents another promising application. The robot could handle complaints with appropriate empathy while maintaining professional composure. Complex emotional situations that typically stress human employees could be managed with consistent emotional intelligence and infinite patience.

Future Outlook and Challenges

AheadForm’s timeline appears aggressive but achievable. The company expects nearly human-like robots within ten years. Technical advances in AI, materials science, and miniaturization support this projection. Manufacturing costs should fall as production scales up.

The twenty-year timeline for indistinguishable human-robot interaction seems more speculative. However, current progress rates suggest significant advances will continue. Voice synthesis, movement patterns, and cognitive responses all require further development to achieve complete authenticity.

Competition will likely intensify as other companies recognize the market potential. Boston Dynamics, Honda, and Tesla all possess resources to develop competing systems. This competition should accelerate development while reducing costs for end users.

Privacy concerns accompany emotional robotics advancement. Robots that read and respond to emotions collect sensitive personal data. Companies must address data security and user privacy protection adequately. Regulatory frameworks will need development to govern emotional AI applications.

Conclusion

The Elf V1 represents a significant milestone in robotics development. AheadForm has created technology that bridges the uncanny valley more effectively than previous attempts. Emotional robotics may finally achieve the breakthrough needed for widespread adoption across multiple industries.

Investment in emotional robotics continues to grow rapidly. Venture capital firms recognize the potential for transformative applications. Market research projects substantial growth in humanoid robotics over the next decade. The Elf V1 positions AheadForm advantageously in this expanding market.

Manufacturing challenges remain significant. Producing 30 precise actuators cost-effectively requires advanced production techniques. Quality control becomes critical when multiple components must work in perfect coordination. AheadForm must scale production while maintaining the precision that makes the Elf V1 exceptional.

The robot’s success will depend partly on user acceptance of emotional AI. Some people feel uncomfortable with machines that display emotions. Cultural differences in emotional expression and interpretation could affect adoption rates globally. Market education will prove essential for widespread acceptance.

Technical maintenance represents another consideration. Complex systems with multiple moving parts require regular service. Users need confidence that emotional robots will operate reliably over extended periods. Service networks and technical support must develop alongside the technology.

AheadForm has created something remarkable with the Elf V1. The 30-actuator system delivers emotional authenticity that previous robots couldn’t achieve. Success in commercializing this technology could transform human-robot interaction permanently. The next decade will reveal whether emotional robotics fulfills its transformative potential.

Revolutionary Facial Expression Technology Sets New Standard with 30 Actuators

China’s Elf V1 humanoid robot represents a breakthrough in facial expression technology through its sophisticated network of 30 facial actuators. These artificial muscles, powered by brushless micro-motors, create highly realistic and dynamic facial expressions that closely mirror human emotions. The precision achieved through this system establishes a new benchmark for emotional authenticity in robotics.

Coordinated Movement Through Advanced Control Systems

The robot’s high-precision control system manages all 30 actuators through software that ensures smooth, coordinated, and natural facial movements. Each actuator operates independently while staying tightly synchronized with the others, producing fluid expressions that avoid the jerky, mechanical movements typical of earlier robotic systems. This level of coordination requires split-second calculations and adjustments to maintain realistic motion patterns.
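The idea of moving many actuators as one coordinated expression can be illustrated with a short Python sketch. It is a hypothetical example, not AheadForm’s control software: it assumes each of the 30 actuators accepts a normalized position between 0.0 and 1.0, and the names `smoothstep` and `transition_frames` are invented for illustration. Every channel shares a single eased progress value, so all actuators start and stop together and accelerate smoothly rather than jerking into place.

```python
from typing import List

NUM_ACTUATORS = 30  # actuator count reported for the Elf V1


def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve with zero slope at t=0 and t=1."""
    t = min(max(t, 0.0), 1.0)  # clamp progress to [0, 1]
    return t * t * (3.0 - 2.0 * t)


def transition_frames(start: List[float], target: List[float],
                      steps: int) -> List[List[float]]:
    """Return `steps` frames moving every actuator from start to target.

    All channels share one eased progress value per frame, so they begin
    and finish together instead of drifting out of phase.
    """
    if len(start) != NUM_ACTUATORS or len(target) != NUM_ACTUATORS:
        raise ValueError(f"expected {NUM_ACTUATORS} actuator positions")
    if steps < 1:
        raise ValueError("steps must be at least 1")
    frames = []
    for i in range(1, steps + 1):
        s = smoothstep(i / steps)
        frames.append([a + (b - a) * s for a, b in zip(start, target)])
    return frames


if __name__ == "__main__":
    neutral = [0.5] * NUM_ACTUATORS  # hypothetical resting positions
    smile = neutral.copy()
    smile[0] = smile[1] = 0.9  # hypothetical mouth-corner channels
    for frame in transition_frames(neutral, smile, steps=5):
        print(round(frame[0], 3))
```

A shared easing curve is one simple way to avoid the abrupt starts and stops of earlier animatronics; a real controller would also have to respect each motor’s speed and torque limits, which this sketch ignores.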

The Elf V1’s bionic skin provides the crucial finishing touch that bridges the gap between mechanical precision and human-like appearance. This soft, ultra-realistic covering replicates both the visual appearance and tactile feel of human skin, significantly reducing the uncanny valley effect that often makes humanoid robots feel unsettling. The combination of advanced actuators beneath this lifelike surface creates expressions that feel genuinely relatable rather than artificially manufactured.

Real-time emotion recognition capabilities elevate the robot’s interactive potential through advanced AI systems that interpret facial cues and respond with appropriate expressions. The low-latency processing ensures natural conversation flow, with the robot detecting human emotions and mirroring them almost instantaneously. This responsive capability transforms static facial features into a dynamic communication tool that adapts to social situations.

The brushless micro-motors powering each actuator provide the reliability and precision necessary for continuous operation. Compared with conventional brushed motors, these components generate less heat and noise and avoid brush wear, delivering consistent performance across thousands of expression cycles. The precise control achieved through this technology enables subtle micro-expressions that convey nuanced emotions, from slight eyebrow raises indicating curiosity to gentle smile variations showing different levels of happiness.

The integration of these 30 facial actuators creates expression possibilities that extend far beyond basic emotional states. The system can generate complex combinations of movements that reflect mixed emotions, transitional states, and culturally specific expressions. This technological advancement positions the Elf V1 as a significant step forward in creating robots capable of meaningful social interaction through authentic emotional expression.
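Combining movements into mixed emotions resembles the blendshape technique from computer animation, where a face is expressed as a neutral pose plus weighted offsets. The Python sketch below assumes that model; the channel numbers, preset values, and the `make_preset` and `blend` functions are hypothetical and do not describe the Elf V1’s actual actuator mapping.

```python
from typing import Dict, List

NUM_ACTUATORS = 30
NEUTRAL = [0.5] * NUM_ACTUATORS  # hypothetical resting pose


def make_preset(changes: Dict[int, float]) -> List[float]:
    """Build an offset vector from {channel index: offset from neutral}."""
    offsets = [0.0] * NUM_ACTUATORS
    for channel, delta in changes.items():
        offsets[channel] = delta
    return offsets


# Illustrative channel assignments only; the real mapping is not public.
PRESETS = {
    "joy": make_preset({0: 0.4, 1: 0.4, 10: 0.1}),
    "surprise": make_preset({4: 0.3, 5: 0.3, 20: 0.35}),
    "concern": make_preset({0: -0.1, 1: -0.1, 4: -0.2, 5: -0.2}),
}


def blend(weights: Dict[str, float]) -> List[float]:
    """Add weighted preset offsets to the neutral pose, clamped to [0, 1]."""
    pose = NEUTRAL.copy()
    for name, weight in weights.items():
        for i, delta in enumerate(PRESETS[name]):
            pose[i] += weight * delta
    return [min(max(p, 0.0), 1.0) for p in pose]


if __name__ == "__main__":
    # "Pleasantly surprised": mostly surprise with a touch of joy.
    mixed = blend({"surprise": 0.7, "joy": 0.3})
    print(round(mixed[0], 2), round(mixed[4], 2))  # prints: 0.62 0.71
```

Because offsets add linearly, a transitional state such as surprise fading into joy is simply a change in weights over time; clamping keeps extreme combinations within each actuator’s assumed range.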

AI-Powered Emotion Generation Enables Dynamic Human-Like Communication

The Elf V1’s emotional intelligence represents a significant leap forward in humanoid robotics. Its AI algorithms don’t just recognize human emotions; they also generate complex emotional states that mirror human responses. This bidirectional emotional processing creates responsive communication patterns that feel natural rather than programmed.

Dynamic Expression Simulation Creates Lifelike Interactions

The robot’s expression simulation capabilities demonstrate remarkable sophistication through coordinated movements. Eye tracking algorithms enable natural gaze patterns and blinking sequences that match human timing. Nodding motions synchronize perfectly with conversational flow, while facial shifts occur in precise coordination with speech patterns. These elements work together to create interactions that feel authentically human rather than mechanical.

Critical features of this dynamic simulation include:

- Real-time facial muscle coordination across all 30 actuators

- Synchronized lip movement that matches phonetic patterns

- Contextual eye contact that responds to conversation topics

- Micro-expressions that convey subtle emotional nuances

- Temporal emotion transitions that mirror human emotional progression
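The natural blinking described above can be approximated by randomizing the gap between blinks so they never fall into a fixed rhythm. In this hypothetical Python sketch, gaps are drawn uniformly between 2 and 6 seconds (an average of about 15 blinks per minute, an assumption chosen to resemble typical resting human rates); AheadForm has not published how the Elf V1 schedules its blinks, and `blink_schedule` is an invented name.

```python
import random
from typing import List, Optional


def blink_schedule(duration_s: float, min_gap_s: float = 2.0,
                   max_gap_s: float = 6.0,
                   seed: Optional[int] = None) -> List[float]:
    """Return blink start times, in seconds, within [0, duration_s).

    Hypothetical parameters: gaps between blinks are drawn uniformly from
    [min_gap_s, max_gap_s], so the rhythm varies the way a person's does.
    """
    if not 0 < min_gap_s <= max_gap_s:
        raise ValueError("require 0 < min_gap_s <= max_gap_s")
    rng = random.Random(seed)  # seeded generator makes runs reproducible
    times: List[float] = []
    t = rng.uniform(min_gap_s, max_gap_s)  # first blink after one gap
    while t < duration_s:
        times.append(t)
        t += rng.uniform(min_gap_s, max_gap_s)
    return times


if __name__ == "__main__":
    schedule = blink_schedule(60.0, seed=42)
    print(f"{len(schedule)} blinks in one minute")
    print([round(t, 1) for t in schedule[:5]])
```

Randomized intervals are a deliberately simple model; a fuller system might also shift blinks toward pauses in speech or changes in gaze, which this sketch does not attempt.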

Advanced Non-Verbal Communication Processing

Beyond facial expressions, the Elf V1 interprets and simulates the full spectrum of non-verbal communication cues. This capability transforms robotic interactions from simple question-and-answer sessions into engaging conversations that feel genuinely responsive. The system reads human body language, tone variations, and contextual social signals to adjust its own responses appropriately.

Large language models integrated into the system support natural conversation flow while generative AI creates contextually appropriate emotional responses. Situational awareness algorithms help the robot understand social dynamics and respond with appropriate emotional intelligence. This combination creates interactions that adapt to individual communication styles and cultural contexts.

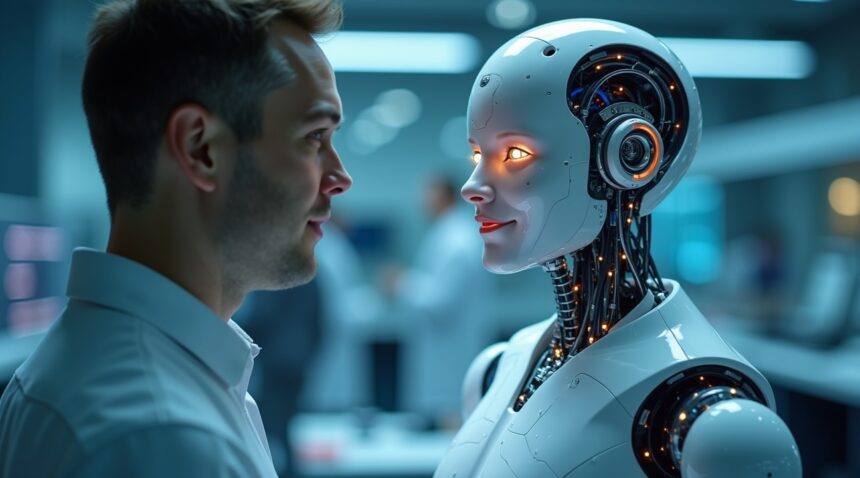

The emotional generation system’s ability to interpret human feelings while simultaneously expressing its own emotional states represents a fundamental shift in human-robot interaction design. Rather than simply following scripted responses, the Elf V1 is designed to engage in an emotional exchange that feels intuitive and natural. This approach shows how sophisticated algorithms can narrow the gap between human emotional complexity and robotic capabilities, creating more meaningful connections between humans and machines.

AheadForm’s Vision for Human-Robot Integration in Daily Life

AheadForm, a Shanghai-based robotics company founded in 2024, represents the cutting edge of emotionally intelligent robotics development. The company’s founder, Yuhang Hu, brings significant experience from his previous work at Columbia University, where he developed the ‘Emo’ robotic face. This background has positioned AheadForm to tackle one of robotics’ most challenging frontiers: creating machines that can truly connect with humans on an emotional level.

The company’s mission centers on bridging the gap between humans and machines through interactive, emotionally intelligent robots designed for seamless integration into everyday life. Unlike traditional robots that focus purely on functional tasks, AheadForm’s approach emphasizes emotional intelligence as the key to successful human-robot interaction. This philosophy drives the development of robots that don’t just perform tasks but genuinely understand and respond to human emotions in meaningful ways.

Hu’s experience with the Emo project at Columbia University provided crucial insights into facial robotics and emotional expression. This foundation has enabled AheadForm to push beyond simple mechanical movements into creating robots capable of nuanced emotional responses. The company’s approach combines advanced facial actuator technology with sophisticated AI algorithms to produce robots that can read, interpret, and mirror human emotions with remarkable accuracy.

Timeline for Human-Like Robot Integration

AheadForm’s projections paint an ambitious picture for the future of human-robot integration. The company anticipates several key milestones that will transform how people interact with robotic companions:

- Nearly human-like robots entering common usage within the next decade, marking the transition from novelty to practical household assistance

- Advanced mobility systems enabling robots to walk naturally and navigate complex environments without human intervention

- Sophisticated communication capabilities allowing robots to engage in natural conversation and understand context

- Task performance capabilities that match or exceed human efficiency in specific areas like elder care, education, and household management

- Emotional intelligence reaching levels where robots can provide genuine companionship and support for isolated individuals

The ultimate goal extends even further into the future, with AheadForm envisioning robots that can walk, talk, and perform tasks indistinguishably from humans within 20 years. This timeline reflects the company’s confidence in artificial intelligence advancements and its commitment to solving the technical challenges that currently separate robotic behavior from human-like interaction.

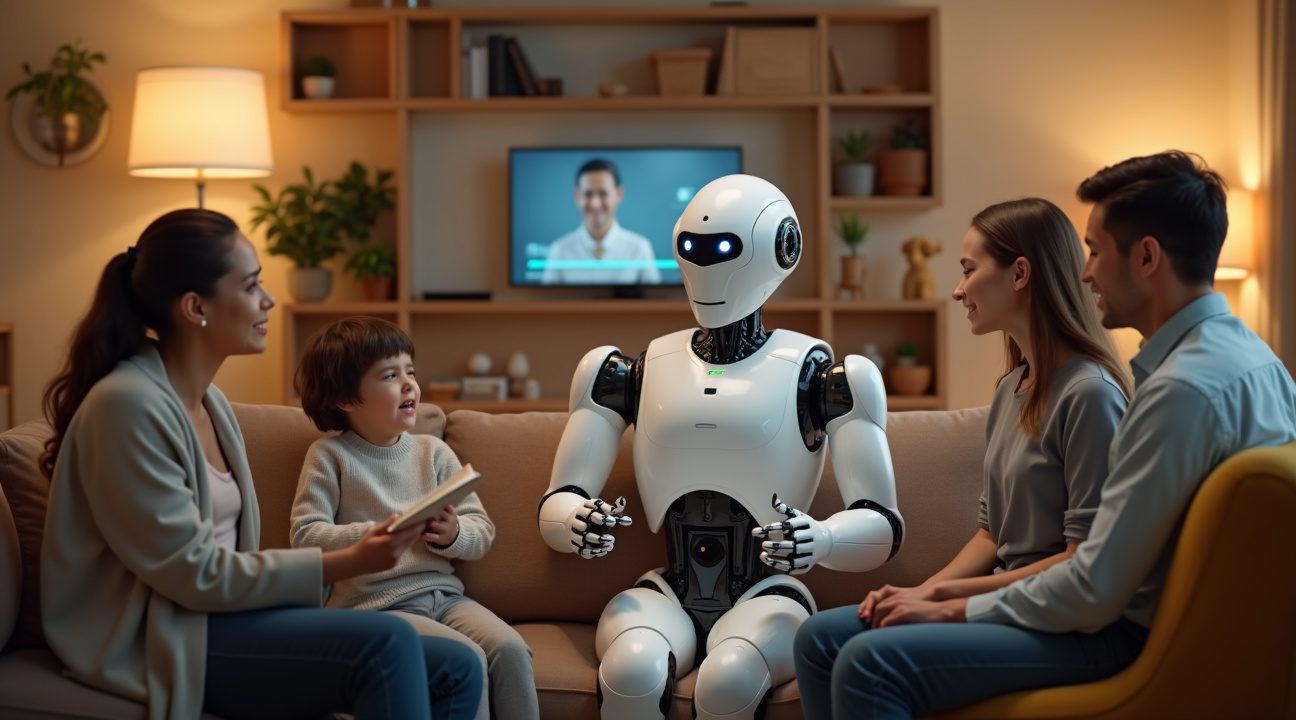

Daily integration represents the core of AheadForm’s vision. Rather than creating robots for specialized industrial applications, the company focuses on developing companions that can naturally fit into existing human social structures. This approach requires robots to understand cultural nuances, respond appropriately to emotional cues, and adapt their behavior based on individual preferences and relationships.

The company’s strategy acknowledges that successful human-robot integration depends on more than just technical capabilities. Trust, empathy, and emotional connection form the foundation of meaningful relationships between humans and their robotic companions. AheadForm’s emphasis on emotional intelligence addresses these psychological factors that determine whether people will accept robots as genuine partners in their daily lives.

Current developments in facial actuator technology, such as the 30 facial actuators in the Elf V1, demonstrate AheadForm’s commitment to authentic emotional expression. These systems enable robots to convey subtle emotions and respond to human feelings with appropriate facial expressions, creating more natural and comfortable interactions.

The vision extends beyond individual companionship to encompass broader social integration. AheadForm anticipates robots serving as:

- Caregivers for aging populations

- Educational assistants for children

- Therapeutic companions for individuals dealing with mental health challenges

This comprehensive approach to daily integration positions AheadForm’s technology as a solution to real social needs rather than a technological curiosity.

Elf V1 Outperforms Competition with Superior Facial Realism

Elf V1’s revolutionary 30 facial actuators represent a significant leap forward in humanoid robotics, establishing a new benchmark for emotional expression that surpasses existing competitors. This advanced system delivers unprecedented facial realism that transforms how humans interact with robotic beings.

Technical Superiority Over Current Market Leaders

The Elf V1’s 30 actuators far exceed Ameca’s 17, giving it roughly 1.8 times as many movement points for emotional expression. This substantial increase allows far more nuanced facial movements and subtler emotional transitions that closely mirror human expressions. Where Ameca must convey emotion through a smaller set of actuators, Elf V1’s expanded configuration enables complex emotional states that earlier humanoid robots struggled to achieve.

The bionic skin technology integrated with these 30 actuators produces ultra-realistic emotional simulation that blurs the line between artificial and human expression. Each actuator works in harmony to create micro-expressions and subtle facial movements that capture the full spectrum of human emotion, from joy and surprise to concern and contemplation.

Competitive Landscape Analysis

Several key competitors occupy different niches within the humanoid robot market, each with distinct strengths and limitations:

- Tesla’s Optimus prioritizes physical labor automation over facial expression, focusing its engineering efforts on industrial applications rather than emotional interaction.

- Boston Dynamics’ Atlas excels in mobility and bipedal walking capabilities but lacks the sophisticated facial features necessary for human-like emotional communication.

- Figure AI’s Figure 02 targets general-purpose robotics applications, attempting to balance multiple capabilities without specializing in any particular area.

These priorities contrast sharply with Elf V1’s dedicated focus on achieving superior facial realism through its extensive actuator system.

The humanoid robot comparison reveals that while competitors excel in specific areas like mobility or industrial function, none match Elf V1’s commitment to emotional authenticity. The actuator count difference alone demonstrates the technical gap between Elf V1 and its nearest competitor in facial expression technology.

This technological advancement positions Elf V1 as a leading candidate for applications requiring genuine human-robot emotional interaction. Industries ranging from healthcare to customer service could benefit from this enhanced emotional capability, as the robot’s ability to display authentic expressions creates more natural and comfortable interactions for users. The superior facial realism achieved through these 30 actuators represents a fundamental shift in how artificial intelligence systems can connect with humans on an emotional level.

Diverse Applications Span Healthcare, Education, and Entertainment Industries

Elf V1’s sophisticated emotional intelligence opens doors across multiple sectors where human connection drives success. The robot’s advanced facial actuator system creates authentic expressions that bridge the gap between human and machine interaction.

Healthcare and Companion Services Transform Patient Care

Healthcare environments benefit significantly from Elf V1’s empathetic capabilities. Emotional support is crucial for elderly patients in care facilities, where loneliness can hinder recovery. The robot’s ability to recognize and respond to human emotions makes it a promising companion for seniors who need consistent emotional interaction.

Elder care facilities could deploy these robots to provide 24/7 companionship, offering comfort during difficult moments and celebrating positive experiences with patients. The robot’s facial expressions adapt to match appropriate emotional responses, aiming to create connections that traditional care methods struggle to provide consistently.

Customer service applications also leverage Elf V1’s emotional intelligence effectively. Front-line positions require staff who can read customer frustration, provide reassurance, and maintain positive interactions throughout challenging conversations. The robot is well suited to these scenarios because its facial actuators produce nuanced expressions that customers may perceive as genuine empathy.

Educational and Entertainment Applications Drive Innovation

Educational settings present another promising application area for emotionally intelligent robotics. Teachers and tutors who can provide non-verbal encouragement often achieve better learning outcomes with students. Elf V1’s responsive facial expressions offer immediate feedback that helps students stay engaged and motivated during lessons.

Special education programs may particularly benefit from this technology, as many students on the autism spectrum respond well to consistent, predictable emotional cues. The robot can maintain patience and provide appropriate emotional support without experiencing fatigue or frustration.

Entertainment industries are exploring Elf V1’s potential as realistic performers in theme parks, theaters, and immersive experiences. Traditional animatronics lack the emotional depth that modern audiences expect from interactive characters. The robot’s sophisticated expression system creates believable performances that enhance storytelling and audience engagement.

Film and television productions could use these robots for scenes requiring consistent emotional responses across multiple takes. Precise control over facial expressions would let directors eliminate the variability that human actors sometimes introduce during lengthy production schedules.

Artificial intelligence advances continue to expand these applications as developers refine emotional recognition algorithms and improve response accuracy across different cultural contexts and social situations.

Mixed Public Reception Reflects Growing Chinese Robotics Leadership

Public response to Elf V1 has been decidedly mixed, with viral clips sparking both fascination and unease among viewers worldwide. Many observers have drawn comparisons to the lifelike androids featured in HBO’s ‘Westworld’, describing the robot as simultaneously impressive and unsettling. The reactions highlight the classic ‘uncanny valley’ phenomenon, where humanoid robots appear close enough to human to trigger discomfort rather than acceptance.

Social media platforms have buzzed with commentary ranging from excitement about technological progress to concerns about the robot’s eerily human-like expressions. Some viewers have specifically noted how the 30 facial actuators create movements that feel almost too realistic, pushing the boundaries of what people expect from mechanical beings. Despite the mixed reactions, the viral nature of these clips demonstrates the significant public interest in advanced artificial intelligence developments.

China’s Strategic Position in Advanced Robotics

The development of Elf V1 represents a significant milestone in China’s robotics leadership, particularly in the transition from purely functional robots to socially interactive humanoid machines. While many international robotics companies have focused primarily on physical agility and industrial applications, Chinese developers have made substantial investments in emotional intelligence and human-robot interaction capabilities.

This strategic focus on social robotics positions China at the forefront of next-generation humanoid development. The integration of AI chatbot technology with sophisticated facial expressions creates possibilities for applications ranging from customer service to elderly care. Chinese companies have demonstrated their ability to combine hardware innovation with software sophistication, creating robots that can engage humans on both practical and emotional levels.

Market Implications and Future Development

The Chinese robotics market has shown remarkable growth in recent years, with government support and private investment driving rapid advancement in humanoid technology. Elf V1’s capabilities suggest that Chinese manufacturers are moving beyond copying existing designs to creating genuinely innovative products that push technological boundaries.

The mixed public reception actually serves as valuable market research, providing developers with insights into consumer comfort levels and preferences for future iterations. As smart technology continues to evolve, understanding public acceptance becomes crucial for successful commercial deployment. The fact that a single robot can generate such widespread discussion indicates the significant potential impact these machines may have on society.

Sources:

Interesting Engineering – China Builds Humanoid Robot With Realistic Eye Movements, Bionic Skin

AheadForm – (company website)

Ground News – Chinese Startup Unveils Hyperrealistic Humanoid Robot

YouTube – (video titled “kalil40”)

Mashable – Chinese Robotics Company ‘Westworld’ Robot Face